Before the dust from the e-Spark summer school had quite settled on the lab benches, Steven and Emilia had already packed their bags and posters and traveled to Kraków for another summer school, this time about Machine Learning. From the 26th of June to the 2nd of July, they heard plenty about priors and posteriors, what can be calculated exactly, what can only be approximated and at what cost, and which neural networks can represent molecules and which cannot.

Emilia took more than 70 pages of notes, including countless references to articles pointed out by the speakers, so it will probably take another week to digest everything that was said during the school. In general, Steven and Emilia enjoyed the lectures given by physicists and chemists who ventured into computer science more than those given by core computer scientists; maybe that will change as they dive deeper into the world of AI.

The lectures can be watched on the conference's YouTube streaming channel, or you can search for them on the main YouTube channel of the organizer, the ML in PL Association.

We especially recommend the following lectures:

All of Monday's lectures were great, but if you have to pick one, go for Matej Balog's “Algorithm discovery using reinforcement learning” (15:30 – 17:30), in which you will learn how to turn anything into a game and teach a computer to find better mathematical algorithms and even discover completely new ones.

On Tuesday, the highlight was Thea Aarrestad's lecture “Unlocking the Secrets of the Universe: Accelerating Discovery with Machine Learning at CERN” (15:30 – 17:00). She talked about how much data is generated at CERN and how often, and what constraints this imposes on data analysis, storage, and further analysis. Not to mention the hardware, which in some cases has to withstand quite a lot of radiation… just WOW. Our lab mates had the chance to meet Thea the previous afternoon: they were deliberating over the best strategies for being less antisocial and finally talking to someone at the summer school when Thea rescued them by joining their table.

On Wednesday, Prof. Anna Gambin gave Steven some valuable ideas for his simulations while presenting “Magnetstein: a universal tool for complex mixture analysis in NMR spectroscopy”, which can analyze data from different instruments recorded at different resolutions. Maybe the Wasserstein (optimal transport) approach could also be used in Steven’s program to analyze data from different potentiostats recorded with different step sizes and alpha values? No less interesting was her other example, in which a mass spectrometry database was built by combining simulated and experimental data to populate the vast space of possibilities. Again, the idea sounds better than running CVs and DPVs at every possible temperature, concentration, scan rate, etc.
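
For readers curious why a Wasserstein distance copes with data recorded on different grids, here is a minimal Python sketch under stated assumptions. It is not Magnetstein's algorithm; it only computes the 1D Wasserstein-1 (earth mover's) distance between two signals treated as distributions of non-negative intensity over position (for example chemical shift or potential). Because it integrates the difference between the two cumulative distributions over the union of both sampling grids, neither signal has to be resampled onto a common step size. The function names, the Gaussian test peak, and the step sizes are hypothetical, and real voltammograms with negative currents would need baseline handling first.

```python
import numpy as np

# Sketch only: function names and the test signal are hypothetical,
# and this is not Magnetstein's implementation.


def _cdf_on_grid(x, w, grid):
    """Cumulative weight of all points x <= g for each g in grid (x must be sorted)."""
    cum = np.concatenate(([0.0], np.cumsum(w)))
    return cum[np.searchsorted(x, grid, side="right")]


def wasserstein_1d(x_a, w_a, x_b, w_b):
    """Wasserstein-1 distance between two 1D signals given as positions and non-negative intensities.

    The two signals may be sampled on different grids (different step sizes).
    """
    x_a, w_a = np.asarray(x_a, dtype=float), np.asarray(w_a, dtype=float)
    x_b, w_b = np.asarray(x_b, dtype=float), np.asarray(w_b, dtype=float)
    # Sort each signal by position.
    ia, ib = np.argsort(x_a), np.argsort(x_b)
    x_a, w_a, x_b, w_b = x_a[ia], w_a[ia], x_b[ib], w_b[ib]
    # Normalise total intensity so that each signal is a probability distribution.
    w_a, w_b = w_a / w_a.sum(), w_b / w_b.sum()
    # Evaluate both step-function CDFs on the union of all sampling points.
    grid = np.union1d(x_a, x_b)
    gap = np.abs(_cdf_on_grid(x_a, w_a, grid) - _cdf_on_grid(x_b, w_b, grid))
    # W1 is the integral of |F_a - F_b|; both CDFs are constant on [grid[i], grid[i+1]).
    return float(np.sum(gap[:-1] * np.diff(grid)))


def gaussian_peak(x, centre, width=0.05):
    """Hypothetical test signal: a single Gaussian peak."""
    return np.exp(-(((x - centre) / width) ** 2))


# The same peak recorded with a 10 mV and a 5 mV step, the second one shifted by 20 mV.
coarse = np.linspace(-0.5, 0.5, 101)
fine = np.linspace(-0.5, 0.5, 201)
shift = wasserstein_1d(coarse, gaussian_peak(coarse, 0.0), fine, gaussian_peak(fine, 0.02))
print(f"W1 = {shift:.4f} V")
```

A pure peak shift shows up directly as a distance close to the 20 mV shift, whereas a point-by-point comparison would first require interpolating one signal onto the other's grid.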

Also on Wednesday (11:00 – 12:30), Yarin Gal’s lecture on Uncertainty in Deep Learning covered the exciting subject of aleatoric uncertainty (the noise inherent in the data) and epistemic uncertainty (the model's own lack of knowledge, for example because data in some range are missing), and how each can be analyzed. In the case of epistemic uncertainty, models can even suggest regions where new samples should be added.
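
A minimal Python sketch can make the distinction concrete. It is a stand-in, not the method from the lecture: instead of a neural network it assumes a hypothetical bootstrap ensemble of polynomial fits (the same logic applies to deep ensembles), and it splits the predictive variance with the law of total variance. The average noise variance estimated by the members approximates the aleatoric part, and the variance of the members' mean predictions approximates the epistemic part. The data, function names, and parameters (`n_members`, `degree`) are all hypothetical.

```python
import numpy as np

# Sketch only: a polynomial bootstrap ensemble stands in for a neural network;
# all names, data and parameters are hypothetical.


def fit_bootstrap_ensemble(x, y, rng, n_members=20, degree=5):
    """Fit polynomials to bootstrap resamples of (x, y).

    Returns a list of (coefficients, residual variance) pairs, one per ensemble member.
    """
    members = []
    for _ in range(n_members):
        idx = rng.integers(0, x.size, x.size)  # resample the data with replacement
        coeffs = np.polyfit(x[idx], y[idx], degree)
        residuals = y[idx] - np.polyval(coeffs, x[idx])
        members.append((coeffs, residuals.var()))  # residual variance roughly estimates the data noise
    return members


def decompose_uncertainty(members, x):
    """Split the ensemble's predictive variance at points x via the law of total variance."""
    means = np.array([np.polyval(c, x) for c, _ in members])  # shape (n_members, len(x))
    noise = np.array([s2 for _, s2 in members])  # shape (n_members,)
    aleatoric = np.full(x.shape, noise.mean())  # average noise level (constant: homoscedastic model)
    epistemic = means.var(axis=0)  # disagreement between the members
    return aleatoric, epistemic


def suggest_next_sample(members, candidates):
    """Return the candidate x where the members disagree most (highest epistemic uncertainty)."""
    _, epistemic = decompose_uncertainty(members, candidates)
    return candidates[np.argmax(epistemic)]


# Hypothetical data: noisy measurements exist only for x in [0, 1], none in (1, 2].
rng = np.random.default_rng(0)
x_train = rng.uniform(0.0, 1.0, 40)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.1, x_train.size)

ensemble = fit_bootstrap_ensemble(x_train, y_train, rng)
x_query = np.linspace(0.0, 2.0, 9)
aleatoric, epistemic = decompose_uncertainty(ensemble, x_query)
for xq, a, e in zip(x_query, aleatoric, epistemic):
    print(f"x = {xq:.2f}   aleatoric = {a:.4f}   epistemic = {e:.4f}")
print("suggested next measurement at x =", suggest_next_sample(ensemble, x_query))
```

Inside the measured range the epistemic term stays small, while for x > 1 it grows rapidly and `suggest_next_sample` points there, mirroring the idea that a model can suggest where new samples should be added. The aleatoric term, by contrast, would not go to zero however many measurements were added, because it reflects the noise in the data itself.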

Friday was again an excellent day for lectures, but the second half of the day, led by Mario Krenn, was especially inspiring, with AI presented as a source of… inspiration. We learned how data can be translated into other senses to make it easier to interpret. If you want to try it yourself, go hunting for black holes (preferably with headphones). Similarly, as we heard from Matej Balog and as you could read on Emilia’s poster, AI can also extract patterns and mathematical formulas describing the data that we mere mortals could not discern. Last but not least, he showed how ML methods can help us explore the vast experimental space and propose new experimental setups or new collaborations, including their topics. It was an excellent lecture, with a perfect balance between when ML makes sense and when it does not. This was illustrated, for example, by the Science4Cast AI competition, in which a participant using simple statistics got much better results than the original neural network.

On Saturday afternoon, Michael Bronstein gave a great tutorial on different types of graph neural networks, and if you are interested in image analysis or need some inspiration for other fields, watch Bartosz Zieliński’s lecture on the use of prototypical parts.

Emilia and Steven also had their chance to share some science at Tuesday’s poster session, where Emilia presented “Underrated applications of PCA loadings: refinement of sensor array composition and analysis of post-translational protein modifications” and Steven presented “Applications of machine learning methods towards the analysis and prediction of mechanistic properties in electrochemistry.”

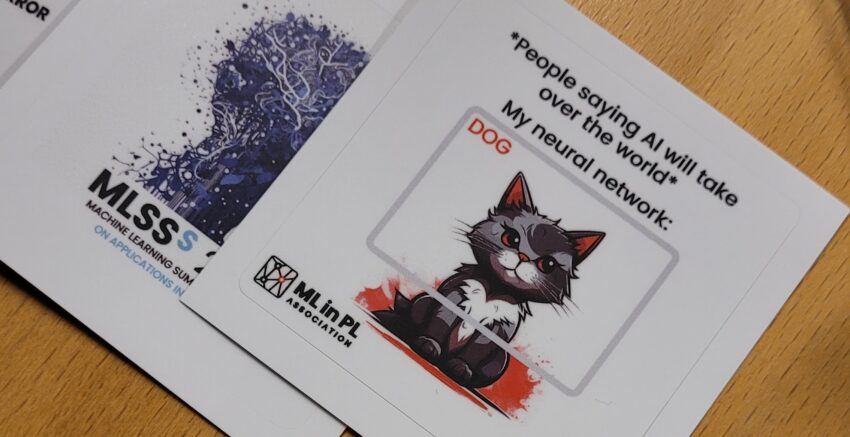

Wow, what a busy week! After something so intense, you start seeing Machine Learning everywhere… even while strolling around Warsaw in search of a grocery store 🙂

We will surely miss the great campus, with swings in the corridor, plants, sculptures, and a cafeteria with super tasty food. They even have a natural history museum! And a lake nearby… Ah, the jealousy…